Synthetic Tests in a Nutshell 🐿️

What You Need to Know About Synthetic Testing

While researching this post, I came across a humorous definition of synthetic testing, so let me start with it.

Synthetic testing is like having a superhero tester. It's not a real person but a super-efficient, always-ready helper that checks that everything works perfectly before you and other real users start using an app or website. It finds problems early, so you get a smooth, hassle-free experience!

By now, I have almost convinced you to integrate synthetic tests into your software testing lifecycle as soon as possible, right?

I came across the term "synthetic testing" relatively recently, more than a year ago, while getting familiar with DataDog. DataDog is a SaaS platform that integrates and automates infrastructure monitoring, application performance monitoring, log management, and real-user monitoring.

If you already work as a software tester, or are at least familiar with the software testing life cycle, you should know that synthetic testing is a complementary type of testing, also called proactive monitoring. It identifies performance issues in key user journeys by simulating real user traffic.
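To make "simulating user traffic" a bit more concrete, here is a minimal Python sketch of a single synthetic check. It is an illustration, not how DataDog implements its checks: it sends one request, measures how long the response takes, and passes only if the status code and latency are acceptable. The function names, the expected 200 status and the 2000 ms threshold are assumptions chosen for the example; the request is delegated to an injectable `fetch` function so the check can be tried without a network.

```python
import time
import urllib.error
import urllib.request
from dataclasses import dataclass
from typing import Callable


@dataclass
class CheckResult:
    ok: bool
    status: int
    duration_ms: float


def urllib_fetch(url: str) -> int:
    """Send a GET request and return the HTTP status code (0 if no response arrived)."""
    try:
        with urllib.request.urlopen(url, timeout=10) as response:
            return response.status
    except urllib.error.HTTPError as err:  # 4xx/5xx responses still carry a status code
        return err.code
    except OSError:  # network-level failures: DNS errors, refused connections, timeouts
        return 0


def run_synthetic_check(
    fetch: Callable[[str], int],
    url: str,
    expected_status: int = 200,
    max_duration_ms: float = 2000.0,
) -> CheckResult:
    """Simulate one user request and judge it on status code and latency."""
    start = time.perf_counter()
    status = fetch(url)
    duration_ms = (time.perf_counter() - start) * 1000
    ok = status == expected_status and duration_ms <= max_duration_ms
    return CheckResult(ok=ok, status=status, duration_ms=duration_ms)
```

With network access you could call `run_synthetic_check(urllib_fetch, "https://example.com")` on a schedule; a monitoring platform adds scheduling, multiple locations and alerting on top of this basic idea.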

What are the benefits of Synthetic Testing?

- Early detection of issues - synthetic tests can identify potential problems in an application or system before real users encounter them. This early detection allows teams to address issues proactively.

- Continuous monitoring - synthetic tests can be scheduled to run at regular intervals, continuously monitoring the application's performance. This helps teams identify and address issues promptly.

- Isolation of components - synthetic tests can focus on specific components or functionalities, allowing teams to isolate and test individual parts of a system.

- Baseline performance metrics - by running synthetic tests regularly, you can establish baseline performance metrics. These benchmarks provide a standard for comparison, making it easier to identify deviations or performance regressions.

- Metrics - synthetic tests give you access to many metrics, such as step duration or LCP (Largest Contentful Paint), the render time of the largest image or text block visible in the viewport.
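The baseline idea can be illustrated with a small sketch, assuming you have a history of past measurements for one metric, for example a step duration or LCP in milliseconds. It flags a new run as a regression when it is more than `k` standard deviations slower than the historical mean. The `k = 3` default and the sample numbers are made up for illustration; real tools may use different rules.

```python
from statistics import mean, stdev


def baseline(history_ms: list[float]) -> tuple[float, float]:
    """Mean and sample standard deviation of past measurements (e.g. step duration or LCP)."""
    if len(history_ms) < 2:
        raise ValueError("need at least two past runs to build a baseline")
    return mean(history_ms), stdev(history_ms)


def is_regression(new_ms: float, history_ms: list[float], k: float = 3.0) -> bool:
    """True if the new measurement exceeds the baseline mean by more than k standard deviations."""
    mu, sigma = baseline(history_ms)
    return new_ms > mu + k * sigma


history = [100.0, 110.0, 90.0, 105.0, 95.0]  # made-up durations in milliseconds
print(is_regression(130.0, history))  # True
print(is_regression(115.0, history))  # False
```

Note that if every past run took exactly the same time, the standard deviation is zero and any slower run is flagged, so in practice you may want a minimum tolerance as well.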

How do you start creating synthetic tests?

I am going to use DataDog as an example, as it is the only monitoring tool I am familiar with, and the one I have used to create and run synthetic tests.

Creating synthetic tests involves simulating scenarios that mimic real-world interactions with your application, system, or network, so the first step is to identify critical scenarios: the key user interactions or critical functionalities within your system or application. These are the scenarios you want to simulate.

Let's consider a simple synthetic test for a web application, using Canva as a working example. Our objective is to test the login functionality of the Canva web application to ensure users can log in successfully. The test steps are:

- Open the web browser and go to the Canva website.

- Click on the login button.

- Click on "Continue with email".

- Enter a valid email.

- Click on "Continue".

- Enter a valid password.

- Click the "Login" button.

- Verify that the user is redirected to the home page.

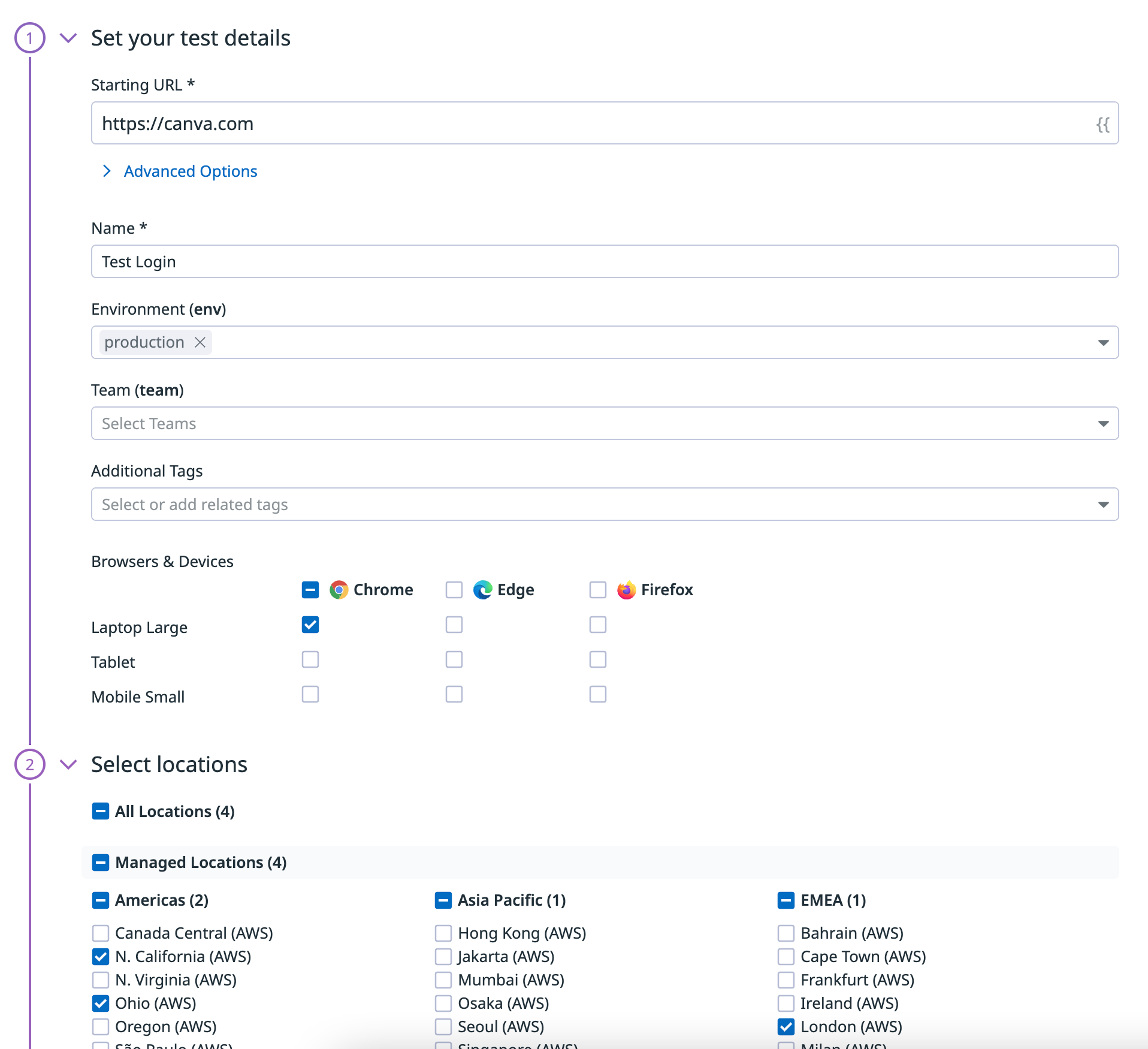

To simulate these steps in DataDog, create a new synthetic test and select the Browser Test type, which lets you record your scenario step by step and run the recording.

Then add the steps and set up the following:

- The environment where the synthetic tests should run, ideally Production, so that you get quick insight into what is happening there.

- Browsers and devices where the test should run.

- The locations from which the test runs, i.e., where the simulated user traffic originates.

- The schedule, i.e., how often the test should run, so you get a regular, up-to-date overview.

- The monitor, including the channels where notifications should go when a test fails.
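The settings in this list could be captured in code roughly as follows. This is a hypothetical Python dataclass that mirrors the list, not DataDog's API schema; the field names and the example browser, device and location values are assumptions for illustration. The `validate` method catches gaps such as a test with no notification channel, whose failures nobody would see.

```python
from dataclasses import dataclass, field


@dataclass
class BrowserTestConfig:
    """Hypothetical mirror of the browser test settings (not DataDog's API schema)."""

    name: str
    environment: str
    browsers: list[str]
    devices: list[str]
    locations: list[str]
    interval_minutes: int
    notify_channels: list[str] = field(default_factory=list)

    def validate(self) -> list[str]:
        """Return a list of configuration problems (empty if the config looks usable)."""
        problems = []
        if not self.browsers:
            problems.append("select at least one browser")
        if not self.devices:
            problems.append("select at least one device")
        if not self.locations:
            problems.append("select at least one location")
        if self.interval_minutes <= 0:
            problems.append("the schedule interval must be positive")
        if not self.notify_channels:
            problems.append("no notification channel: failures would go unnoticed")
        return problems


# Illustrative values only; real browser, device and location names depend on your tool.
login_test = BrowserTestConfig(
    name="Canva login",
    environment="production",
    browsers=["chrome", "firefox"],
    devices=["laptop-large"],
    locations=["frankfurt", "virginia"],
    interval_minutes=15,
    notify_channels=["#qa-alerts"],
)
print(login_test.validate())  # []
```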

With the login scenario above, you can continuously check (every 15 minutes, or however often you set it in the Scheduling step) that the Canva login with a username and a password works, on the selected browsers and devices, from the specified locations, and in the specified environment. If it does not, you are notified immediately on the channels you provided.
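Scheduling choices multiply quickly, which matters for cost. The sketch below counts test executions per day, assuming each browser, device and location combination is executed separately at every scheduled interval; check your tool's pricing model before relying on that assumption.

```python
MINUTES_PER_DAY = 24 * 60


def runs_per_day(interval_minutes: int, browsers: int, devices: int, locations: int) -> int:
    """Executions per day, assuming every browser/device/location combination runs each interval."""
    if interval_minutes <= 0:
        raise ValueError("interval_minutes must be positive")
    intervals = MINUTES_PER_DAY // interval_minutes  # 96 for a 15-minute schedule
    return intervals * browsers * devices * locations


# Example: the login test every 15 minutes on 2 browsers, 1 device, from 3 locations.
print(runs_per_day(15, browsers=2, devices=1, locations=3))  # 576
```

At 576 runs per day, this single login test would run more than 17,000 times in a 30-day month, so the interval and the number of locations are worth choosing deliberately.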

For each step that runs, you can see its Duration (how many milliseconds or seconds the step took), and a screenshot is captured for every step.
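Per-step timing can be sketched as a small step runner. This is an illustration under assumptions, not DataDog's recorder: each step is a hypothetical Python callable standing in for a browser action, the runner times every step and stops at the first failure, and screenshots are left out.

```python
import time
from dataclasses import dataclass
from typing import Callable


@dataclass
class StepResult:
    name: str
    passed: bool
    duration_ms: float
    error: str = ""


def run_steps(steps: list[tuple[str, Callable[[], None]]]) -> list[StepResult]:
    """Run steps in order, timing each one; stop at the first failing step."""
    results: list[StepResult] = []
    for name, action in steps:
        start = time.perf_counter()
        try:
            action()
            passed, error = True, ""
        except Exception as exc:  # any exception counts as a failed step
            passed, error = False, str(exc)
        duration_ms = (time.perf_counter() - start) * 1000
        results.append(StepResult(name, passed, duration_ms, error))
        if not passed:
            break  # later steps depend on this one, so don't run them
    return results


# Hypothetical stand-ins for the browser actions in the Canva login scenario.
def verify_home_page() -> None:
    raise AssertionError("user was not redirected to the home page")


login_steps = [
    ("Open the browser and go to the Canva website", lambda: None),
    ("Click the login button", lambda: None),
    ("Enter a valid email and continue", lambda: None),
    ("Verify redirect to the home page", verify_home_page),
]

for result in run_steps(login_steps):
    outcome = "PASS" if result.passed else f"FAIL ({result.error})"
    print(f"{result.name}: {result.duration_ms:.2f} ms {outcome}")
```

Stopping at the first failure mirrors how a real user journey works: if the login button cannot be clicked, there is no point in checking the redirect.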

Continuous Integration

You can use the DataDog CI NPM package (`@datadog/datadog-ci`) to run your synthetic tests directly within your CI/CD pipeline. This lets you automatically halt a build, block a deployment, or roll back a deployment when a synthetic browser test detects a regression.
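The gating decision can be sketched in a few lines, assuming you already have pass/fail results for your synthetic tests. The `SyntheticResult` type and its `blocking` flag are hypothetical; the point is that a non-zero exit code is what makes a CI job fail and stop the pipeline. The DataDog CI package takes care of running the tests; this sketch only illustrates the decision, not how the package implements it.

```python
import sys
from dataclasses import dataclass


@dataclass
class SyntheticResult:
    test_id: str
    passed: bool
    blocking: bool = True  # hypothetical flag: a failing non-blocking test only warns


def should_deploy(results: list[SyntheticResult]) -> tuple[bool, list[str]]:
    """Allow the deployment only if no blocking test failed; also return the failed IDs."""
    failed = [r.test_id for r in results if not r.passed and r.blocking]
    return not failed, failed


def gate(results: list[SyntheticResult]) -> int:
    """Return a process exit code; most CI systems fail the job on a non-zero code."""
    ok, failed = should_deploy(results)
    if not ok:
        print(f"Blocking synthetic tests failed: {', '.join(failed)}", file=sys.stderr)
        return 1
    return 0


# Made-up results; a real pipeline script would end with sys.exit(gate(results)).
results = [SyntheticResult("canva-login", passed=False), SyntheticResult("homepage", passed=True)]
print(gate(results))  # 1
```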

Some disadvantages I see in using synthetic tests

- Limited realism - synthetic tests, by their nature, simulate user interactions and scenarios, so they may not fully capture the complexity and variability of real user behavior. Real users can interact with applications in unexpected ways that synthetic tests do not cover. That's why I consider synthetic tests complementary to other types of tests, such as end-to-end tests.

- Incomplete test coverage - creating comprehensive synthetic tests that cover all possible scenarios can be challenging. And expensive!

- Costs and resources - running numerous tests regularly may lead to increased costs and can be resource-intensive.

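- False positives and negatives - a test may fail when the application works (false positive) or pass when something is broken (false negative). Incorrectly configured or overly sensitive assertions can contribute to these inaccuracies.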
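One common way to reduce false positives caused by one-off glitches is to alert only after several consecutive failures. The sketch below is a generic illustration, not a DataDog feature; the class name and the threshold of 2 are assumptions. The trade-off is that a real outage is reported one or more runs later.

```python
class ConsecutiveFailureAlerter:
    """Fire an alert only after `threshold` failed runs in a row."""

    def __init__(self, threshold: int = 2) -> None:
        if threshold < 1:
            raise ValueError("threshold must be at least 1")
        self.threshold = threshold
        self.consecutive_failures = 0

    def record(self, passed: bool) -> bool:
        """Record one test run; return True when an alert should be sent."""
        if passed:
            self.consecutive_failures = 0  # a passing run ends the failure streak
            return False
        self.consecutive_failures += 1
        # Alert exactly once per streak, when it reaches the threshold.
        return self.consecutive_failures == self.threshold


alerter = ConsecutiveFailureAlerter(threshold=2)
runs = [False, True, False, False, False]  # made-up pass/fail history
print([alerter.record(passed) for passed in runs])  # [False, False, False, True, False]
```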

That's synthetic testing in a nutshell. As you can see, synthetic testing plays a crucial role in maintaining the health and reliability of applications and systems by providing early insights, automating testing processes, and helping ensure consistent performance under various conditions.

Have I convinced you to give them a try?

Happy testing, M.