How Google Analytics Can Help Assess Risks in Software Testing

We talk a lot about risks in software development in general, and even more in software testing. This happens because testing is often constrained by time, resources or budget.

There have been lots of talks and articles lately about how to identify potential risks and then find patterns, techniques and heuristics to mitigate them.

Hard job, I know. It’s hard because we tend to go after the low-hanging fruit and engage in bug hunting rather than in identifying the risks behind the bugs. And it’s hard because identifying risks requires experience and good analytical skills, and we don’t always have them in our teams.

That being said, I want to ask you, my tester reader, a personal question.

How many times have you tested an application or a piece of functionality without having the end user in mind? Without trying to imagine how a real user would do what you’re trying to test?

Pause.

It’s fine, your secret is safe with me. It has happened to me too, so there is no need to be ashamed.

This is where Google Analytics, a free web analytics service offered by Google, comes in. Its main purpose is to capture and present information about traffic, user behaviour and user actions, and to help you understand how the website you’re developing and testing is used by its end users. One of the biggest challenges for testers is that a website “needs to be supported on all the latest browsers, all operating systems, latest Android and iOS”. If you build a matrix for this requirement, you end up with tons of browser, OS and device combinations, and just thinking about how long it would take to test them all will give you nightmares.
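To get a feeling for how quickly such a matrix grows, here is a minimal Python sketch. The lists of browsers, operating systems and device types are hypothetical examples, not taken from a real project, and the naive count deliberately includes combinations that do not exist in practice (Internet Explorer on iOS, for instance).

```python
from itertools import product

# Hypothetical lists, chosen only to illustrate how the matrix grows.
browsers = ["Chrome", "Firefox", "Safari", "Internet Explorer", "Edge"]
operating_systems = ["Windows", "macOS", "Linux", "Android", "iOS"]
device_types = ["desktop", "tablet", "phone"]

# Every browser x OS x device combination, before any pruning.
full_matrix = list(product(browsers, operating_systems, device_types))

print(len(full_matrix))  # 5 * 5 * 3 = 75
```

Even after removing the impossible combinations, the remaining list is usually far too long to test in full, which is why some way of prioritising is needed.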

Google Analytics uses a block of JavaScript code, inserted into the pages and actions you want to collect data from, and presents all this data in reports.

Collecting data from Google Analytics

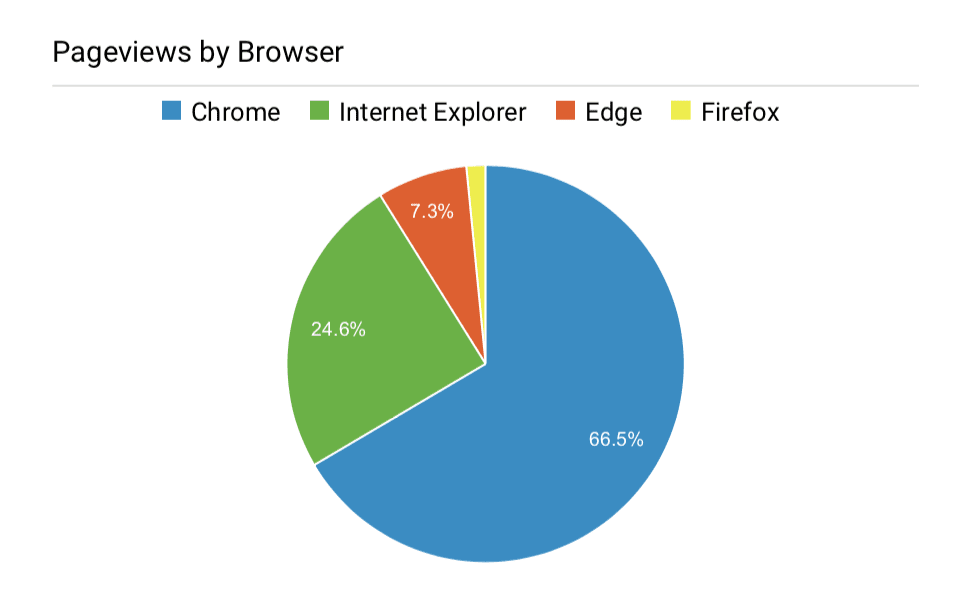

Part of our testing strategy was to focus more on Chrome and Firefox, and then on Safari and Internet Explorer. When we started to analyse the data coming from Google Analytics, we had a surprise: the numbers did not match our plan.

The bad part was that we had severely underestimated the usage of Internet Explorer and Edge.

The action we took was to adapt our browser testing strategy to match the potential risks raised by the GA report. Another step in tailoring our testing strategy was to have an automated regression pack running constantly on Chrome, while we used our manual testing resources on Internet Explorer and Edge. The numbers in the GA reports, monitored over a longer period of time, can help you predict:

- when a browser needs to have a higher priority in testing;

- when a browser needs to be removed from the browser matrix as obsolete;

- when a browser needs to be added to the browser matrix.
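The three decisions above can be sketched as a small Python function. This is only an illustration: the session counts are invented, the 20% and 2% thresholds are assumptions you would tune for your own product, and `classify_browsers` is a hypothetical helper, not part of Google Analytics.

```python
def classify_browsers(sessions, current_matrix, high=0.20, low=0.02):
    """Suggest an action per browser from its share of sessions.

    `high` and `low` are assumed thresholds, not values from GA.
    """
    total = sum(sessions.values())
    if total == 0:
        raise ValueError("no sessions in the report")

    plan = {}
    for browser in sorted(set(sessions) | set(current_matrix)):
        share = sessions.get(browser, 0) / total
        in_matrix = browser in current_matrix
        if share >= high:
            action = "high priority"
        elif share >= low:
            action = "keep" if in_matrix else "add"
        else:
            action = "remove" if in_matrix else "ignore"
        plan[browser] = (round(share, 3), action)
    return plan


# Invented numbers, standing in for a GA browser report export.
sessions = {
    "Chrome": 4200,
    "Internet Explorer": 2600,
    "Edge": 1500,
    "Firefox": 900,
    "Safari": 700,
    "Opera": 100,
}
current_matrix = {"Chrome", "Firefox", "Safari", "Internet Explorer", "Opera"}

plan = classify_browsers(sessions, current_matrix)
for browser, (share, action) in plan.items():
    print(f"{browser}: {share:.1%} -> {action}")
```

Running the same function on reports from several months makes the trend visible: a browser that slides from "keep" to "remove", or one that shows up as "add", is a signal to revisit the matrix.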

Other data to be considered

You might find yourself in a situation where you don’t have historical data or knowledge about how the user scenarios were created, for example when you are new to a company or a project. Or maybe you don’t yet have the bigger picture of the business you’re trying to understand and test.

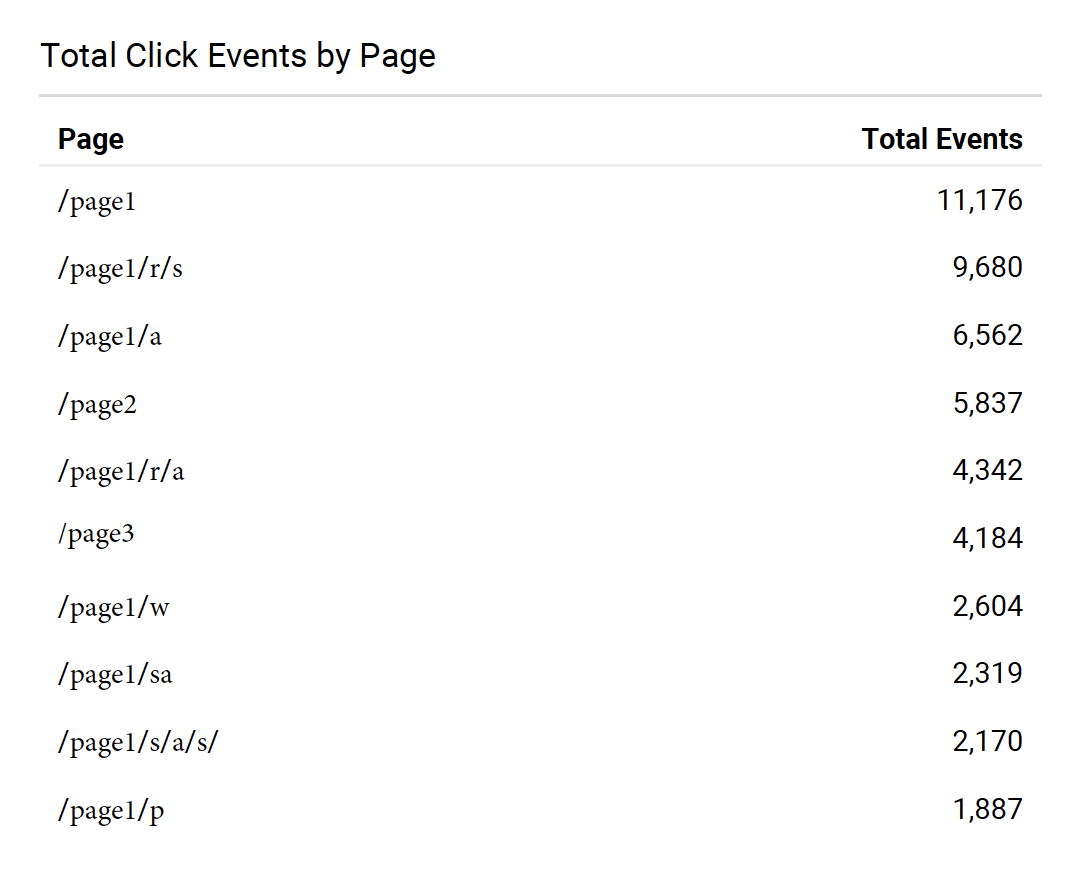

Analysing the total click events by page and combining them with other statistics, such as most popular pages by page views, top click events or average session duration, can help you form a new point of view on your product and on the needs of your end users.
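One simple way to combine these statistics is to turn them into a single ranking of pages. The sketch below is an assumption about how you might do it, not a Google Analytics feature: the page paths and numbers are invented, and `rank_pages` is a hypothetical helper that weighs page views and click events equally by default.

```python
def rank_pages(page_views, click_events, weight_views=0.5):
    """Rank pages by a blended score of page views and click events.

    Each metric is scaled to its own maximum so the two are comparable.
    """
    pages = set(page_views) | set(click_events)
    max_views = max(page_views.values(), default=0) or 1
    max_clicks = max(click_events.values(), default=0) or 1

    scores = {}
    for page in pages:
        views = page_views.get(page, 0) / max_views
        clicks = click_events.get(page, 0) / max_clicks
        scores[page] = weight_views * views + (1 - weight_views) * clicks

    # Highest score first; ties are broken alphabetically by page path.
    return sorted(scores.items(), key=lambda item: (-item[1], item[0]))


# Invented numbers, standing in for two exported GA reports.
page_views = {"/home": 5000, "/search": 3000, "/checkout": 1200, "/about": 400}
click_events = {"/home": 800, "/search": 1500, "/checkout": 2000}

ranking = rank_pages(page_views, click_events)
for page, score in ranking:
    print(f"{page}: {score:.2f}")
```

The pages at the top of such a list are where a defect would reach the most users, so they are reasonable candidates for the first and deepest test coverage. The weighting is a judgment call: a checkout page with few views but many clicks may deserve more weight than this equal split gives it.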

Analysing the Google Analytics statistics works hand in hand with the other aspects you take into account when defining your testing strategy. The natural first step is to identify your users and their common behaviours. Making use of the data provided by Google Analytics reports can then help you better evaluate the risks affecting your testing process.

The more accurately you define the browser, operating system and device matrix and the user journeys, the lower the chance that something you overlooked will bite you in the future.

Photo by rawpixel on Unsplash.